The Why of Artificial Intelligence

The science of Artificial Intelligence (AI) is an exciting field of research. For more than 60 years it has fascinated scientists in areas as diverse as mathematics, computer science, psychology and neuroscience. Progress towards the goal of building intelligent machines has been enormous, and the field promises to keep surprising us in the near future.

The origin of the term Artificial Intelligence can be found in 1955, when it was described as:

The science and engineering of making machines that behave in a way that we would call intelligent if a human behaved that way.

John McCarthy

-

The concept of Artificial Intelligence

The term intelligence was initially associated with the human mind, but there is a broader conception of intelligence that also covers the activities of living organisms, such as ants cooperating to collect food, or the way natural selection operates on living beings. Accordingly, there are two strands, called strong AI and weak AI. The first aims at the creation of "machines with a mind", which some scientists regard as the primary objective of research. The second aims at the development of "machines with intelligent behaviours": computer systems with different capacities or behaviours associated with "intelligence".

At the beginning of the eighties artificial intelligence re-emerged as a scientific field that attracted attention, now as a more mature and cautious field of knowledge, far from the initial expectations and the abstract pursuit of intelligence. This period saw the rise of expert systems: artificial systems oriented towards practical application to concrete problems by means of approximate reasoning procedures that use knowledge of the problem. It was also the decade in which important research areas such as computer vision, machine learning and computational intelligence were consolidated.

In subsequent decades, the duality between systems that act rationally and systems that think like humans has persisted, and advances have been made in the processing of huge amounts of information and in the reasoning capacity of artificial systems.

-

Artificial Intelligence in Literature, Film and Television

We all have a concept of what Artificial Intelligence involves, due largely to the science fiction that feeds the minds of both citizens and scientists. Whether through literature, film or television, we all picture a future that is mediated by machines. In fact, we cannot say for certain what inspires the scientists who make advances in research: the technology we already use and want to improve, or the Hollywood scriptwriters who surprise us year after year with realistic, multimillion-dollar productions of stories that may yet come true.

Science fiction literature has not only made innovations such as the submarine seem natural to us, but has also fed the imagination of readers with the idea of beings capable of reasoning and behaving intelligently, something close to AI. In "Frankenstein; or, The Modern Prometheus" (1818) by the English writer Mary Shelley, often considered the first science fiction novel, Dr. Victor Frankenstein creates a humanoid creature that provokes both fear and tenderness in the reader and that, by the end of the story, has become a murderer, rebelling against its creator.

This strong conception of AI is also present in films such as "2001: A Space Odyssey" (1968), with the computer HAL 9000, capable of responding to any demand because it understands natural language without difficulty. In this sense, two major areas of research are Natural Language Processing (NLP) and Computing with Words (CW).

Natural language is the communication protocol between humans. Human beings communicate with each other and express their personal or emotional states through spoken or written language. In addition, language can be considered the projection and concrete expression of human knowledge and intelligence. Natural language is therefore the appropriate source of data for understanding human beings, representing their knowledge and communicating with them. However, natural language is unstructured data and cannot be used directly by a computer system. There are methods based on neural networks (Deep Learning) that extract high-quality linguistic features from written statements. These features are not limited to a superficial interpretation of the text, such as a bag-of-words representation, but also capture its syntactic structure. One use of these linguistic features is the development of an opinion classification system, an essential element of Opinion Analysis in social networks. Such a classification system can itself be built with neural networks.
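To illustrate why a bag-of-words representation is considered superficial, here is a minimal sketch in Python. The function name `bag_of_words` and the two example sentences are assumptions made for illustration; they do not come from any particular NLP library or from the system described here.

```python
from collections import Counter
import re

def bag_of_words(text):
    """Count how often each word occurs, ignoring order and punctuation."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

# Two opinions with opposite meanings (hypothetical examples).
positive = bag_of_words("The guide was good, not bad.")
negative = bag_of_words("The guide was bad, not good.")

# Both sentences contain exactly the same words, so their bags are identical.
print(positive == negative)
```

Because word order is discarded, the two opposite opinions become indistinguishable, which is the kind of limitation that features capturing syntactic structure are meant to overcome.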

Computing with words is a methodology backed by more than forty years of scientific results. It defends the possibility that computer programs can handle words instead of numbers, operate with them and give computational results expressed in words. Thanks to this conception of algorithms, computers would be closer to a human being's way of thinking and reasoning. Humans do not need exact measurements: our intellect is made to understand concepts such as "that building is very tall" without knowing the building's actual height in meters. Mathematical tools such as fuzzy sets make it possible to express inexact or imprecise values coherently in computer systems.
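The idea of expressing "very tall" without an exact measurement can be sketched with a fuzzy set. In the following Python sketch the thresholds (20 m and 100 m), the linear membership shape and the function names are all assumptions chosen for illustration. Modelling "very" as squaring the membership degree is a common convention in fuzzy logic, not something the text prescribes.

```python
def tall_membership(height_m, low=20.0, high=100.0):
    """Degree (0.0 to 1.0) to which a building of height_m meters is 'tall'.

    The 20 m and 100 m thresholds are illustrative assumptions.
    """
    if height_m <= low:
        return 0.0
    if height_m >= high:
        return 1.0
    return (height_m - low) / (high - low)

def very(degree):
    """Linguistic hedge 'very', modelled here as squaring the degree."""
    return degree ** 2

def describe(height_m):
    """Turn a numeric height into a word-based description."""
    tall = tall_membership(height_m)
    if very(tall) >= 0.5:
        return "very tall"
    if tall >= 0.5:
        return "tall"
    return "not tall"

for h in (30, 60, 90):
    print(h, "m ->", describe(h))
```

A building does not switch abruptly from "not tall" to "tall". Its membership degree grows gradually, and the program answers with a word rather than a number.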

Thus, we define Computational Intelligence as an area within the field of artificial intelligence that focuses on the design of intelligent computer systems that imitate nature and human linguistic reasoning to solve complex problems.

Returning to cinema, Chris Columbus's film "Bicentennial Man" (1999) is based on the novel "The Positronic Man" by Isaac Asimov and Robert Silverberg. In this film the NDR robot "Andrew" wages a struggle lasting more than a hundred years against the governmental system of his time to be recognized as human, touching on issues that humanity has long debated: slavery, prejudice, love and death. The film imagines a future in which the prostheses and devices that humans use to stave off aging bring them as close to machines as Andrew's upgrades bring him to being human.

Steven Spielberg's film "A.I. Artificial Intelligence" (2001) is set in the 22nd century. A company creates child-like robots that show love to their human owners, as a substitute for the lack of children in that society. David is the robot protagonist, assigned to a family whose son, Martin, is in suspended animation while a cure for his illness is sought. When Martin returns, a rivalry develops between the two. David has the ability to love as well as hate, which creates a conflict with Martin in the struggle for their mother's love.

Let's recall again "2001: A Space Odyssey" and its computer HAL (shift each letter one position forward in the alphabet and the initials IBM appear). This conception of dialogue and understanding between a computer and a human has also been brought to the screen in the film "Her" (2013). Here the intelligent device is an operating system that is ubiquitous because it is not tied to a single machine, and that interacts with users like a person capable of rich conversation. The sense of companionship is so strong that the protagonist falls in love with his operating system, feeling emotions as human as jealousy.

In the television series "Extant" (2014-2015), Molly Woods (played by Halle Berry) is an astronaut who returns from a 13-month solo mission on a space station and discovers that she is pregnant despite her infertility. Her husband is a robotics engineer who has created their "son" Ethan, an android with artificial intelligence who is being raised in their home and who shows fully human behaviors typical of his age. With her husband's help, Molly begins to discover that the company funding his project may have something to do with her bizarre pregnancy.

Cinema continues to provide very plausible ideas of what our future may look like. This is the case of Minority Report (2002), a film based on a short story of the same name by Philip K. Dick. Besides its box-office success, it soon became famous for another reason: the way it imagined and predicted the future. Some of the technologies that appear in the film are now a reality:

- Gesture recognition: The main character, played by Tom Cruise, uses his hands to interact with the software system. The arrival of touchscreen phones already showed how much hand gestures ease the use of these devices. For example, a multi-touch interface lets us turn the pages of an electronic book on a tablet with the same gesture we would use with a paper book, or close an application by pinching with three fingers. Since the arrival of Microsoft Kinect (announced in 2009) and Leap Motion technology (2010), gestural interfaces have also become three-dimensional.

- Facial and ocular recognition: In the film it is used to identify individuals at a governmental level (like an ID card) and for contextual and personalized advertising (recommender systems, an example of weak AI).

- Videoconferences: Nowadays we can make video calls with our mobile phones, although paradoxically we make more use of short audio and text messages in applications like WhatsApp. In fact, Skype was born right after this movie, in 2003.

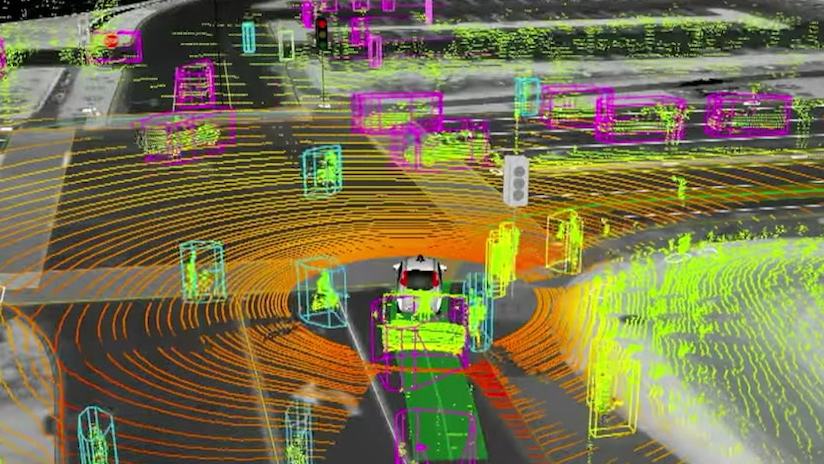

- Autonomous cars: In the automotive field we find countless examples of intelligent technologies that assist us while driving: sensors and cameras that show angles and distances when parking, semi-automatic and automatic parking assistants, and so on. Progress in autonomous driving is also increasingly visible. Self-driving cars are expected to become widespread within a few years, with 2020 or 2025 often cited.

- Personalized advertising: Technologies such as Google's AdSense track our online behavior and then make suggestions and show advertising according to our wishes. Each time we enter Amazon, the searches from previous visits produce a list called "Treat yourself" from which it is often really difficult to escape. The large amount of data available today, together with the tools needed to process it, make up what we know as Big Data. Everyday objects such as cars, watches or glasses are beginning to connect to the Internet to feed new services that require a constant exchange of information. Progress and innovation are currently limited not by the ability to collect data but by the ability to manage, analyze, synthesize and visualize it, and to discover the knowledge underlying it. This is the challenge of Big Data technologies.

- Voice-controlled homes: Google Home is Google's smart speaker. Unlike the assistants of other companies, its assistant does not have an anthropomorphized name like Apple's Siri, Microsoft's Cortana or Amazon's Alexa, and is simply invoked with the company name, Google. Smart speakers at home also pose new privacy challenges. Because they are permanently listening to all the conversations in the home, the risk of our privacy being exposed by a software error, an attack or simply a change in the terms of service can be serious. It is not a new risk, however, since it already exists with other devices such as laptops, tablets, mobile phones and smartwatches.

- Electronic paper: Electronic ink is the technological basis of devices such as the Kindle, which let us replace paper with something much more comfortable for the eye (especially in low light conditions). It is true that color electronic ink is not yet widely commercialized, but it is only a matter of time.

- Crime prediction: Within data science, there are data preprocessing techniques that improve the quality of knowledge extraction (data mining) so that more and better information is obtained. The tasks involved include cleaning inconsistencies, detecting and removing noise, imputing missing values and applying data reduction techniques to eliminate redundant or unnecessary data. The result is a dataset that is smaller than the original and that improves the effectiveness of prediction and description algorithms. Low-quality data can lead to predictive or descriptive models (data mining models) with reduced performance, hence the principle "quality decisions must be based on quality data". For crime prediction, AI tools are composed of two parts. First, there is a system that collects information from social networks, including anonymized networks where criminals tend to communicate (Tor, Freenet or I2P). Once the relevant information is obtained, it is passed on to the predictive engine, which produces the result.
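Two of the cleaning steps mentioned under crime prediction, removing redundant records and imputing missing values, can be sketched as follows. This is a minimal illustration in Python. The record layout, the field names and the choice of mean imputation are assumptions, and real preprocessing pipelines typically use more robust techniques.

```python
from statistics import mean

def clean(records, field):
    """Drop exact duplicate records, then fill missing `field` values
    with the mean of the known values (a simple imputation strategy)."""
    seen, unique = set(), []
    for rec in records:
        key = tuple(sorted(rec.items()))  # hashable fingerprint of the record
        if key not in seen:
            seen.add(key)
            unique.append(dict(rec))      # copy so the input is not modified
    known = [r[field] for r in unique if r[field] is not None]
    fill = mean(known) if known else None
    for r in unique:
        if r[field] is None:
            r[field] = fill
    return unique

# Hypothetical data: incident counts per district, with one duplicate
# and one missing value.
raw = [
    {"district": "A", "incidents": 4},
    {"district": "A", "incidents": 4},
    {"district": "B", "incidents": None},
    {"district": "C", "incidents": 8},
]
for row in clean(raw, "incidents"):
    print(row)
```

The cleaned set is smaller than the original and has no gaps, which is the kind of input that prediction algorithms need to perform well.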

-

Artificial Intelligence in MonuMAI

The great technological impulse that we usually refer to as Big Data has revolutionized the business environment. For the first time in the history of AI there is a widespread demand for systems with advanced intelligence, equivalent to that of a human, that are capable of processing such data. Today we are seeing Big Data proliferate rapidly to cover all organizations and all sectors. For this we need machines to be able to learn from their own experience. The discipline of Machine Learning addresses this challenge.

At present, machine learning can be considered one of the areas in which advances will be most important in the coming years, as it can give intelligent systems the capacity to learn.

Neural networks are currently one of the most important machine learning paradigms, and they are models of interest according to the following definition of computational intelligence:

Computational intelligence is a branch of artificial intelligence focused on the theory, design, and application of biologically and linguistically inspired computational models, with an emphasis on neural networks, evolutionary and bioinspired algorithms, fuzzy logic, and the hybrid intelligent systems in which these paradigms are contained.

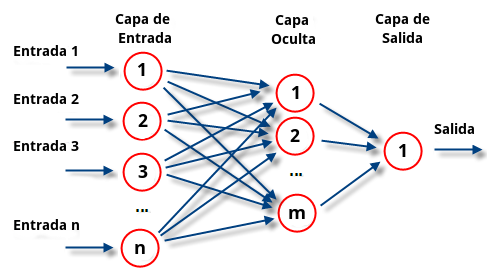

IEEEA neural network is proposed as an intelligent system that mimics the nervous system and the structure of the brain, but is very different in terms of its structure and scale. A brain is much larger than any artificial neural network, and artificial neurons are a very simple unit of computation as opposed to a biological neuron.

Artificial neurons also interconnect to form networks. Each neuron uses a propagation function that aggregates the information arriving on its input connections from other artificial neurons, an activation function, and a transfer function that produces the value the neuron sends along its output connections.
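A single artificial neuron of this kind can be sketched in a few lines of Python. The weighted sum used for aggregation and the sigmoid used to produce the output are common choices assumed here for illustration. For simplicity the sketch collapses the activation and transfer steps into one function, and the function name and example values are hypothetical.

```python
import math

def neuron_output(inputs, weights, bias):
    """Compute the output of one artificial neuron.

    1. Aggregate the inputs as a weighted sum plus a bias.
    2. Squash the result into the range (0, 1) with a sigmoid.
    """
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))

# Three incoming connections with different strengths (illustrative values).
print(neuron_output([0.5, 0.1, 0.9], [0.8, -0.4, 0.3], bias=-0.2))
```

In a network, the value returned by one neuron becomes one of the inputs of the neurons it connects to.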

Scheme of an artificial neural network.

Neural networks are appropriate for applications where no a priori model of the system is known but a set of input examples is available; the network learns from these examples and ends up with a behavioural model. Applications can be developed as long as we have examples to learn from. The application areas are therefore many, such as voice recognition, image classification, signal analysis and processing, process control, and prediction problems in finance, meteorology, biomedicine, the experimental sciences, engineering, etc.
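Learning a behavioural model purely from examples can be illustrated with the classic perceptron learning rule. The sketch below, in Python, trains a single neuron to reproduce the logical AND function from four labelled examples. The learning rate, the number of epochs and the function names are assumptions for illustration, and real networks use many neurons and more sophisticated training algorithms.

```python
def predict(inputs, weights, bias):
    """Return 1 if the weighted sum is positive, otherwise 0."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0

def train_perceptron(examples, epochs=100, lr=0.1):
    """Adjust weights and bias whenever a prediction is wrong."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(inputs, weights, bias)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

# No model of AND is given to the program, only input/output examples.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_EXAMPLES)
for inputs, target in AND_EXAMPLES:
    print(inputs, "->", predict(inputs, weights, bias), "expected", target)
```

The program is never told what AND means. It only sees examples and corrects itself after each mistake until its behaviour matches them.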

-

Our AI model

The strong point of the MonuMAI app is its Artificial Intelligence component, which uses Deep Learning techniques to autonomously recognize the architectural styles present in the images uploaded to the system. The use of Deep Learning (DL) techniques in general, and Convolutional Neural Networks (CNNs) in particular, allows the system to perform its task autonomously and to improve with use.

The development of the AI component of the app is based on two pillars:

- An extensive and quality image database.

- A detection model based on a CNN.

The database has been built using images of different Andalusian monuments selected from different Internet portals. The DL model for the recognition of architectural styles is based on an intelligent selective search technique combined with a CNN that represents the state of the art in detecting objects in images.

Artificial neural networks are loosely inspired by the behavior of the human brain. They consist of a large number of artificial neurons that connect to each other and are organized hierarchically. A neural network model is capable of learning the distinctive characteristics of each object by repeatedly viewing a set of images of one or several elements.

The knowledge that the model acquires during learning is stored in the neurons and their connections, and the model can extrapolate from it. In our case, this gives it the ability to recognize elements present in monuments through common characteristics such as form, texture, color or context. As a result, the model can recognize elements in new scenes that it has never seen.
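The text does not describe the internals of the network used, but the basic operation that gives a CNN its name can be sketched briefly. In the following Python illustration, a small filter slides over a toy image and responds strongly where it finds a vertical edge, a simple example of a "form" characteristic. The image, the filter values and the function name are assumptions. In a real CNN the filter values are learned from the training images rather than written by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and return, for each
    position, the sum of element-wise products (cross-correlation, as
    used in CNN layers)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Toy grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written filter that reacts to dark-to-bright vertical transitions.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
print(convolve2d(image, vertical_edge))  # [[0, 2, 0], [0, 2, 0]]
```

The output is highest in the middle column, exactly where the edge lies. Stacking many such learned filters in layers is what allows a network to move from edges and textures to whole architectural elements.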
